Building an On-Premises AI Agent Foundation with GBase on NVIDIA Spark

Introduction

As companies look for practical ways to bring artificial intelligence (AI) into their operations, Sparticle Co., Ltd., based in Chuo, Tokyo, has introduced the GBase platform, designed to tackle the challenges organizations face when implementing generative AI. Running on NVIDIA Spark, GBase enables businesses to establish an affordable and effective on-premises AI agent infrastructure. This article covers the features and benefits of GBase on Spark, the reasons to choose it, and how it can be used in various corporate environments.

GBase on Spark: Overview

GBase on Spark is tailored for on-premises environments and combines large language models (LLMs) and vision-language models (VLMs) in a single platform. It supports high-accuracy retrieval-augmented generation (RAG) and can parse handwritten notes, roughly written documents, complex tables, and diagrams. Model quantization and optimization for the Arm platform allow GBase to run smoothly on NVIDIA Spark, so that corporate knowledge can be put to use readily.
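To make the idea of retrieval-augmented generation concrete, the following is a minimal sketch of the retrieval step, assuming a toy word-count similarity in place of the embedding models a production system would use. It is not GBase's implementation; the function names and the sample documents are hypothetical and exist only for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=2):
    """Return up to top_k (doc_id, score) pairs with a non-zero similarity."""
    q = Counter(tokenize(query))
    scored = [
        (doc_id, cosine(q, Counter(tokenize(text))))
        for doc_id, text in documents.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [(doc_id, score) for doc_id, score in scored[:top_k] if score > 0]


def build_prompt(query, documents, top_k=2):
    """Assemble a prompt that carries the retrieved passages and their ids."""
    hits = retrieve(query, documents, top_k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id, _ in hits)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"{context}\n\nQuestion: {query}"
    )


# Hypothetical internal documents, invented for this example.
DOCS = {
    "hr-001": "Employees may request paid leave through the HR portal.",
    "it-014": "Reset your VPN password from the IT self-service page.",
    "fin-007": "Expense reports are due by the fifth business day of each month.",
}

if __name__ == "__main__":
    print(build_prompt("How do I reset my VPN password?", DOCS))
```

The prompt produced by `build_prompt` would then be passed to a locally hosted LLM. Keeping the document ids in the prompt is one simple way to let the model cite its sources in the answer.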

The NVIDIA Spark AI Server

NVIDIA Spark is a compact AI server that can be placed on a desk, making it more accessible for enterprises than conventional rack-mounted servers. It runs quietly enough to be used in a typical office without disturbing staff. Organizations can set up GBase on Spark without a dedicated server room or special climate control, which gives them a straightforward way to add an LLM server to their operations. Moreover, companies can purchase standard off-the-shelf models, drastically reducing the lead time often associated with custom server orders.

Why Choose GBase on Spark?

Cost Efficiency

Traditionally, running large language models on-premises required substantial investment in dedicated GPU servers, often costing hundreds of thousands of yen for a single unit. GBase on Spark changes this by running on hardware starting from tens of thousands of yen, potentially reducing costs to as little as 1/20 of previous requirements.

Enhanced Functionality on a Single Spark Unit

GBase’s dual structure of LLMs and VLMs allows it to handle complex documents flexibly and to process materials such as scanned documents and manuals efficiently. Internal FAQ support, table RAG, and image analysis are all available from a single Spark unit.

Data Security and Privacy

With GBase on Spark, data is handled on-premises: LLM inference and RAG searches occur entirely within the internal network. This removes the need to transfer confidential information to the cloud and helps meet zero-trust and data sovereignty requirements, which is especially important in sectors such as finance, public service, and manufacturing.
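As an illustration of the "stays inside the internal network" requirement, the sketch below shows one way a client could refuse to send prompts to anything other than a private or loopback address. It is an assumption-laden example rather than a GBase feature: the function name `is_internal_endpoint` and the sample URLs are hypothetical, and a real deployment would rely on network-level controls as well.

```python
import ipaddress
from urllib.parse import urlparse


def is_internal_endpoint(url):
    """Return True only if the URL's host is a literal private or loopback IP."""
    host = urlparse(url).hostname
    if host is None:
        return False
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        # Hostnames would need DNS resolution to classify; this sketch
        # rejects them to stay on the conservative side.
        return False
    return addr.is_private or addr.is_loopback


if __name__ == "__main__":
    # Hypothetical endpoints, for illustration only.
    for url in ("http://10.0.0.5:8000/v1/chat", "https://api.example.com/v1"):
        verdict = "allowed" if is_internal_endpoint(url) else "blocked"
        print(f"{url}: {verdict}")
```

A check like this covers only network location. Zero-trust designs additionally authenticate and authorize every request, regardless of where it originates.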

Low Maintenance Operations

The design of the Spark AI server considerably simplifies operational maintenance. GBase includes a web-based management interface along with automatic log collection and monitoring functions, which together eliminate the need for a dedicated infrastructure team. Existing IT personnel can manage AI operations effectively, keeping operational overhead to a minimum.

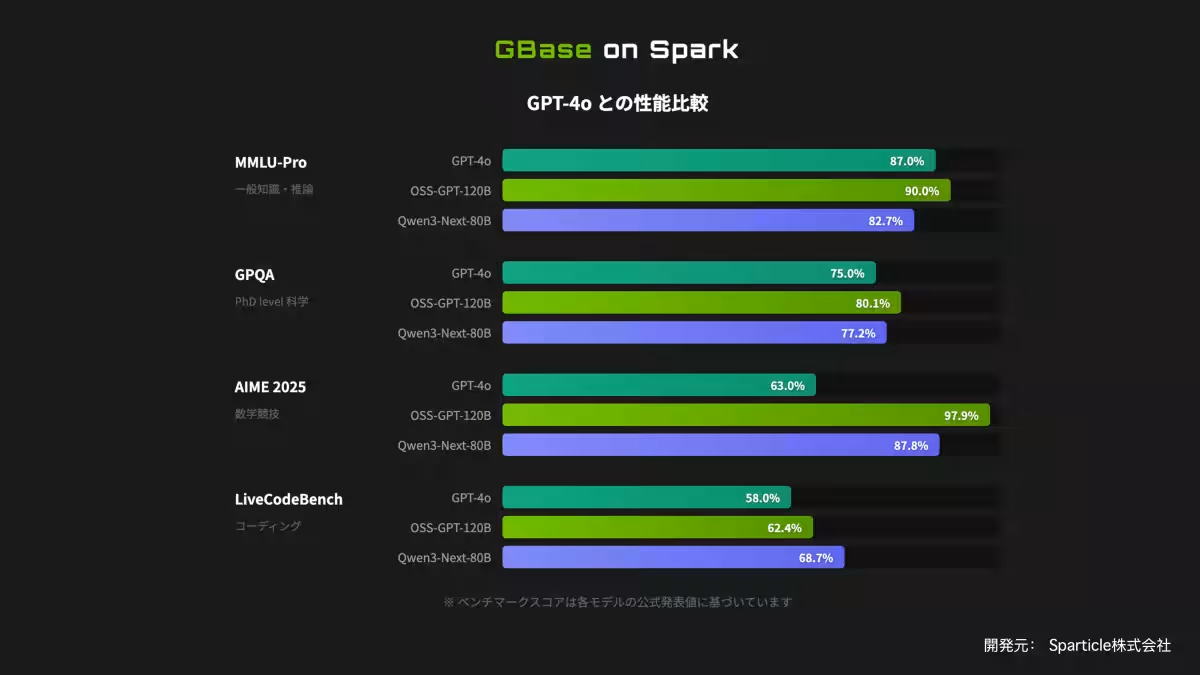

Unparalleled Capabilities

With GBase on Spark, users can run next-generation open-source LLMs that serve dozens of simultaneous users and support a 32K context window with a turnaround time of under 10 seconds.

Key Functionalities Offered by GBase on Spark

- Robust Document Handling: Support for diverse document types, enabling analysis of handwritten, scanned, and diagram-filled content.

- High-Precision Chat and Citation Reporting: Answers with clear sources, ensuring the reliability of responses.

- Q&A Knowledge Building and Continuous Learning: Knowledge expands automatically as the system is used.

- Security Features: Provides access control, usage log recording, and auditing capabilities.
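The security features listed above (access control, usage logging, and auditing) can be pictured with a small sketch. The example below assumes a simple role-to-collection mapping and an in-memory log. All names, roles, and collections are hypothetical and do not describe GBase's actual mechanism.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditLog:
    """In-memory usage log; a real system would persist these entries."""
    entries: list = field(default_factory=list)

    def record(self, user, action, resource, allowed):
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "resource": resource,
            "allowed": allowed,
        })


# Hypothetical mapping from role to the knowledge collections it may read.
ROLE_COLLECTIONS = {
    "hr": {"hr-policies", "company-faq"},
    "engineer": {"manuals", "company-faq"},
}


def can_read(role, collection):
    """Access control check: unknown roles get no access."""
    return collection in ROLE_COLLECTIONS.get(role, set())


def query_collection(user, role, collection, log):
    """Log every attempt, then either run the search or refuse it."""
    allowed = can_read(role, collection)
    log.record(user, "query", collection, allowed)
    if not allowed:
        raise PermissionError(f"{user} ({role}) may not read {collection}")
    return f"searching {collection}"
```

Recording the attempt before the permission check raises means denied requests also appear in the log, which is what an auditor would typically want to review.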

Addressing Significant Challenges

By enabling organizations to build on-premises LLM and RAG infrastructure starting from just one Spark unit, GBase significantly lowers the initial investment threshold. Even in resource-constrained environments, it provides enough capacity to meet internal knowledge demands, cutting down the time staff spend searching for information.

Use Cases in Business Operations

GBase on Spark shines in supporting functions like internal help desks and front-end customer service. It can assist staff in rapidly responding to inquiries, enhancing efficiency without burdening team members. Additionally, upcoming features for automatic document creation will allow for seamless integration of knowledge accumulation into routine documentation tasks.

Moving Forward

As businesses seek to improve their AI capabilities, GBase on Spark offers an accessible way to integrate AI, allowing companies to enhance their operations and knowledge retention safely and efficiently. Interested organizations are encouraged to start with a free consultation to explore implementations tailored to their operations. Fully automated solutions, such as generating meeting minutes from audio or creating documents automatically from accumulated knowledge, are planned as future enhancements.

For further details on the GBase platform and to arrange consultations, please visit the official Sparticle website.
