The Surge of Shadow AI Risks Amidst Rapid Growth of Generative AI Platforms

Rise of Shadow AI Risks

The latest findings from Netskope Threat Labs show a 50% increase in the use of generative AI platforms among corporate end users in just three months, from early March to the end of May 2025. Even as organizations work to adopt SaaS generative AI applications and AI agents securely, the unsanctioned use of AI applications by employees, known as 'shadow AI', continues to spread. More than half of the AI applications in use at companies today are estimated to be shadow AI, which creates new security risks.

The Rise of Generative AI Platforms

Generative AI platforms are emerging as foundational infrastructure that organizations use to build custom AI applications and agents. Their ease of use and flexibility make them the fastest-growing category of shadow AI: the number of users of generative AI platforms rose by 50% in the three months ending in May 2025. These platforms allow corporate data stores to be connected directly to AI applications, which improves operational efficiency. At the same time, that direct connection creates new security risks for corporate data and underscores the need for robust data loss prevention (DLP) practices, continuous monitoring, and user awareness training. Network traffic associated with generative AI platforms also rose sharply, increasing by 73% compared to the preceding quarter. By May, 41% of organizations were using at least one generative AI platform, led by Microsoft Azure OpenAI (29%), Amazon Bedrock (22%), and Google Vertex AI (7.2%).

Netskope Threat Labs' Director, Ray Canzanese, expressed concern regarding the rapid rise of shadow AI. Organizations are now under pressure to identify who is leveraging generative AI to create novel applications and where these developments are occurring. While security teams are reluctant to stifle employee innovation, the use of AI continues to proliferate. Protecting these innovations necessitates a reevaluation of AI application controls and an evolution towards DLP policies that incorporate real-time user coaching mechanisms.

Expanding AI Utilization in On-Premises Environments

The adoption of local generative AI running on on-premises GPU resources is opening up more options for rapid innovation with AI. These include on-premises tools that connect to SaaS generative AI applications and platforms. Notably, the use of large language model (LLM) interfaces is rising, with 34% of organizations currently using them. Ollama (33%) is by far the most common choice, while alternatives such as LM Studio (0.9%) and Ramalama (0.6%) see only limited use.

At the same time, many employees are experimenting with AI tools, and access to AI marketplaces is increasing rapidly. For instance, users in 67% of organizations are downloading resources from Hugging Face. Anticipation around AI agents is driving this trend: the data shows a swift rise in users building AI agents and using the agent features of SaaS solutions across their organizations. Currently, GitHub Copilot is used in 39% of organizations, while 5.5% have users running agents built with popular AI agent frameworks on-premises.

Furthermore, on-premises agents are beginning to request more data from SaaS services, with an increase in access to non-browser-based API endpoints. Two-thirds of organizations (66%) are making API calls to api.openai.com, while 13% are doing so to api.anthropic.com.
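As an illustration of how a security team might begin to measure this kind of non-browser API traffic, the following is a minimal sketch, not Netskope's method. It assumes request URLs have already been exported from a proxy or firewall log; the function name `count_genai_api_calls`, the host set, and the sample URLs are hypothetical choices made for this example.

```python
from collections import Counter
from urllib.parse import urlsplit

# The two API hosts named in the article; extend this set for your environment.
GENAI_API_HOSTS = {"api.openai.com", "api.anthropic.com"}


def count_genai_api_calls(urls):
    """Count requests per generative AI API host in an iterable of URLs."""
    counts = Counter()
    for url in urls:
        # hostname is lowercased by urlsplit and is None for malformed URLs
        host = urlsplit(url).hostname
        if host in GENAI_API_HOSTS:
            counts[host] += 1
    return counts


# Hypothetical sample data standing in for exported log entries.
sample_urls = [
    "https://api.openai.com/v1/chat/completions",
    "https://api.anthropic.com/v1/messages",
    "https://example.com/index.html",
    "https://api.openai.com/v1/embeddings",
]

if __name__ == "__main__":
    for host, n in count_genai_api_calls(sample_urls).items():
        print(f"{host}: {n} request(s)")
```

Matching on exact hostnames keeps the sketch simple; a real deployment would likely also need to account for traffic that is encrypted, proxied, or routed through other endpoints.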

Rapid Expansion of SaaS Generative AI Applications

Netskope is currently tracking over 1,500 different SaaS generative AI applications, a more than fourfold increase from just 317 in February 2025. This surge reflects how quickly new applications are being released and adopted by organizations. On average, organizations are using around 15 generative AI applications, up from 13 in February. The amount of data uploaded to generative AI applications each month has also grown, rising from 7.7GB to 8.2GB over the preceding quarter.

Corporate users are gravitating towards specialized tools like Gemini and Copilot as these chatbots advance in integration with productivity suites. Consequently, numerous security teams are working on measures to enable secure enterprise-wide usage of these applications and solutions.

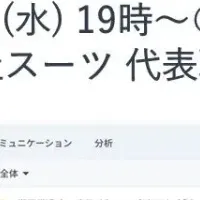

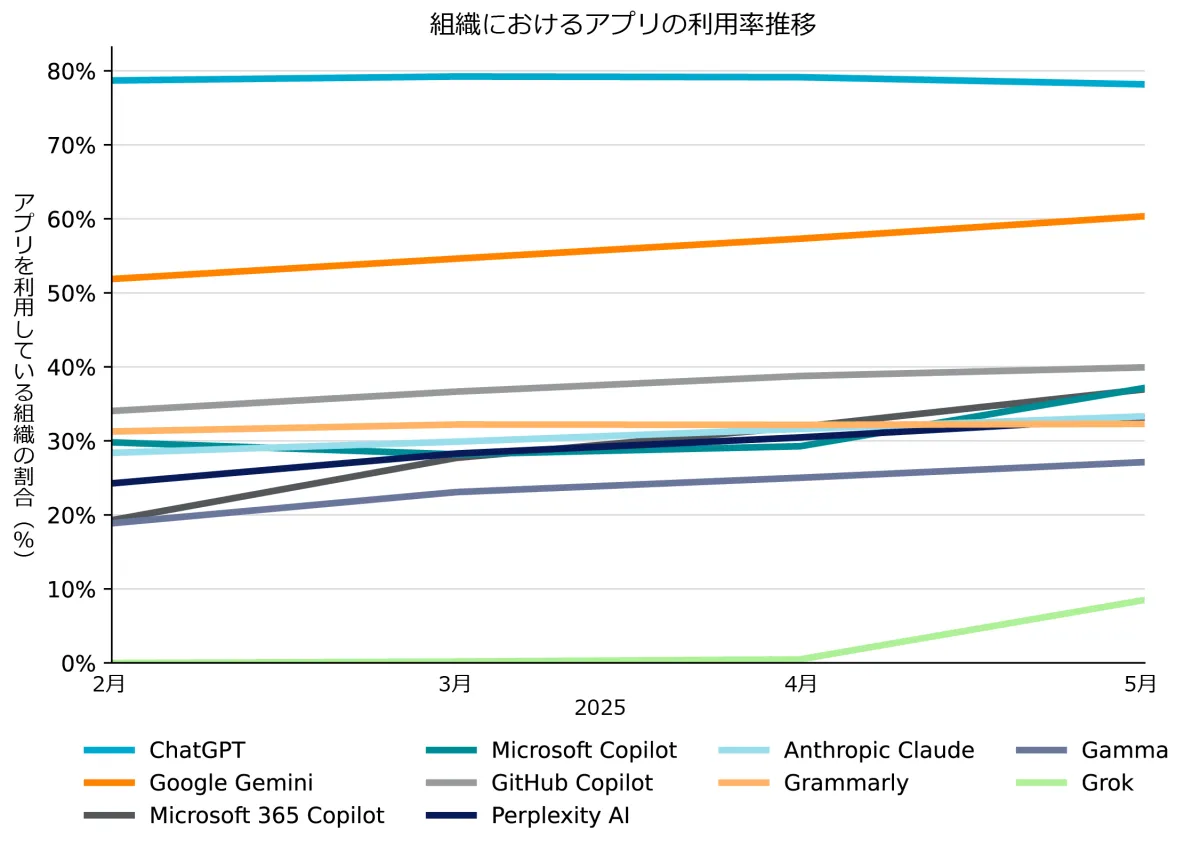

Interestingly, the enterprise utilization rate of the general-purpose chatbot ChatGPT witnessed its first decline since the commencement of surveys in 2023. Among the top 10 generative AI applications within organizations, only ChatGPT saw a decrease in usage since February, while other key applications such as Anthropic Claude, Perplexity AI, Grammarly, and Gamma have all experienced expanded adoption.

Additionally, Grok’s popularity has surged, and it has entered the top 10 most used applications for the first time. Although Grok is still among the top 10 most blocked applications, its block rate is trending downward as organizations increasingly opt for granular controls and monitoring instead.

Ensuring Safe AI Utilization

As the use of diverse generative AI technologies accelerates, CISOs and other security leaders must implement appropriate measures for safe and responsible adoption. Netskope offers the following recommendations:

1. Understand Generative AI Usage: Organizations should assess which generative AI tools are being utilized across the board, determining who uses them and how.

2. Strengthen Management of Generative AI Applications: Establish and enforce policies allowing only approved generative AI applications within the organization, alongside strong blocking capabilities and real-time user guidance.

3. Thoroughly Manage Local Environments: Organizations that run generative AI infrastructure locally should review and apply security frameworks for large language model applications, such as the OWASP Top 10 for LLM Applications, and ensure suitable protection for the data, users, and networks that connect to that infrastructure.

4. Continuous Monitoring and Awareness: Monitor generative AI usage within the organization on an ongoing basis, and keep up with newly detected shadow AI, changes in AI ethics or regulations, and emerging adversarial threats.

5. Assess Risks of Agent-Based Shadow AI: Identify the departments leading the adoption of agent-based AI, and work with them to formulate practical policies that limit shadow AI usage.
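The first two recommendations (inventory what is in use, then compare it against an approved list) can be sketched as follows. This is an illustrative example under stated assumptions rather than a Netskope feature: the `(user, app)` event format, the function name `find_shadow_ai`, and the sample approved list are all hypothetical.

```python
from collections import defaultdict


def find_shadow_ai(events, approved_apps):
    """Group users by generative AI app and flag apps not on the approved list.

    events: iterable of (user, app) pairs, assumed to come from a
            proxy or similar usage log (hypothetical format).
    approved_apps: set of app names sanctioned by the organization.

    Returns (shadow_apps, shadow_share): a dict mapping each unapproved
    app to the set of users seen using it, and the fraction of all
    observed apps that are unapproved.
    """
    users_by_app = defaultdict(set)
    for user, app in events:
        users_by_app[app].add(user)

    shadow_apps = {
        app: users
        for app, users in users_by_app.items()
        if app not in approved_apps
    }
    shadow_share = len(shadow_apps) / len(users_by_app) if users_by_app else 0.0
    return shadow_apps, shadow_share


# Hypothetical sample data: who used which app.
sample_events = [
    ("alice", "ChatGPT"),
    ("bob", "ChatGPT"),
    ("carol", "Gemini"),
    ("alice", "Grok"),
]

if __name__ == "__main__":
    shadow, share = find_shadow_ai(sample_events, approved_apps={"Gemini"})
    for app, users in sorted(shadow.items()):
        print(f"{app}: {sorted(users)}")
    print(f"Share of observed apps that are unapproved: {share:.0%}")
```

The output answers both "which tools are in use, and by whom" and "how much of that usage is unsanctioned", which is the starting point for the policy and coaching steps described above.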

The full report can be accessed here. This article is based on a press release published on August 4, 2025, US time.

About Netskope Threat Labs

Home to some of the industry's leading cloud threat and malware researchers, Netskope Threat Labs is dedicated to discovering, analyzing, and designing defenses against the latest cloud threats affecting enterprises. Through proprietary research and detailed analysis, the lab works to protect Netskope’s customers from malicious threat actors and contributes research, advice, and best practices to the global security community. The lab is led by security researchers and engineers with extensive experience in establishing and managing companies worldwide, including in Silicon Valley. Its members are active speakers and volunteers in the community and regularly take part in leading security conferences such as DEF CON, Black Hat, and RSA.

About Netskope

Netskope leads the industry in cutting-edge security and networking technology, offering optimized access and real-time, context-based security regardless of where people, devices, or data are located. Thousands of customers, including over 30 Fortune 100 companies, rely on the Netskope One Platform, Zero Trust Engine, and powerful NewEdge network. These solutions give organizations visibility into usage across all cloud, AI, SaaS, web, and private applications, helping them reduce security risk and improve network performance. For more information, please visit netskope.com/jp.

Media Inquiries

For inquiries, please contact:

Netskope Public Relations Office

(Next PR LLC)

TEL: 03-4405-9537

FAX: 03-6739-3934

E-mail: [email protected]
