Netskope's Latest Report Reveals Rising Data Leak Risks in Healthcare Amid AI Growth

Rising Data Leak Risks in Healthcare

Netskope Threat Labs, a leader in security and networking research, has published a report on data leak risks in the healthcare industry, a sector increasingly exposed to cloud-related threats. The study documents healthcare employees uploading sensitive data to unapproved websites and cloud services in violation of data policy. As generative AI applications such as ChatGPT and Google Gemini become commonplace, these data policy violations are on the rise.

Key Findings of the Report

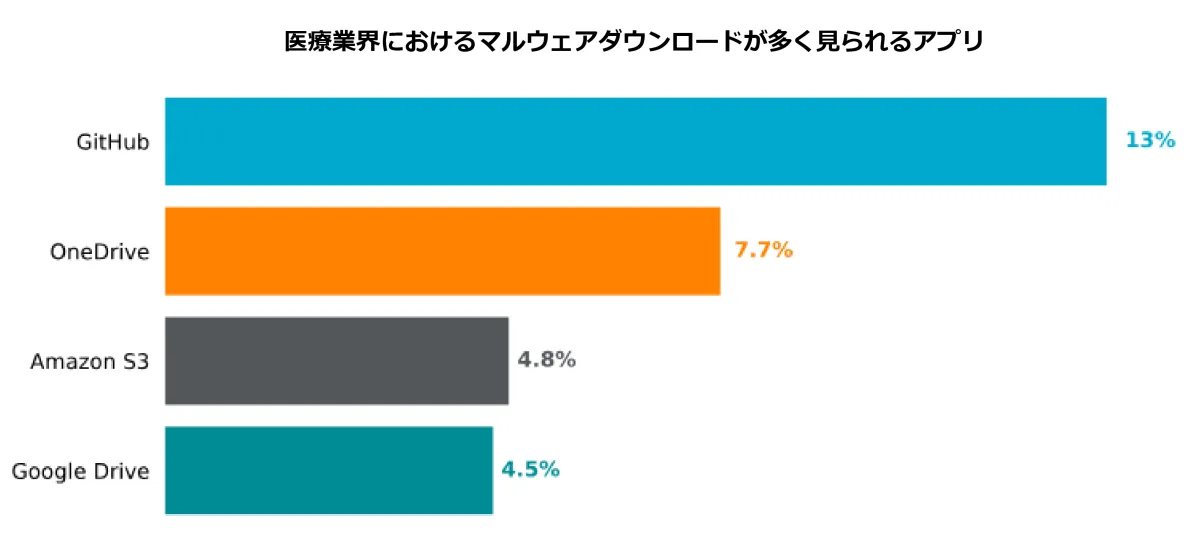

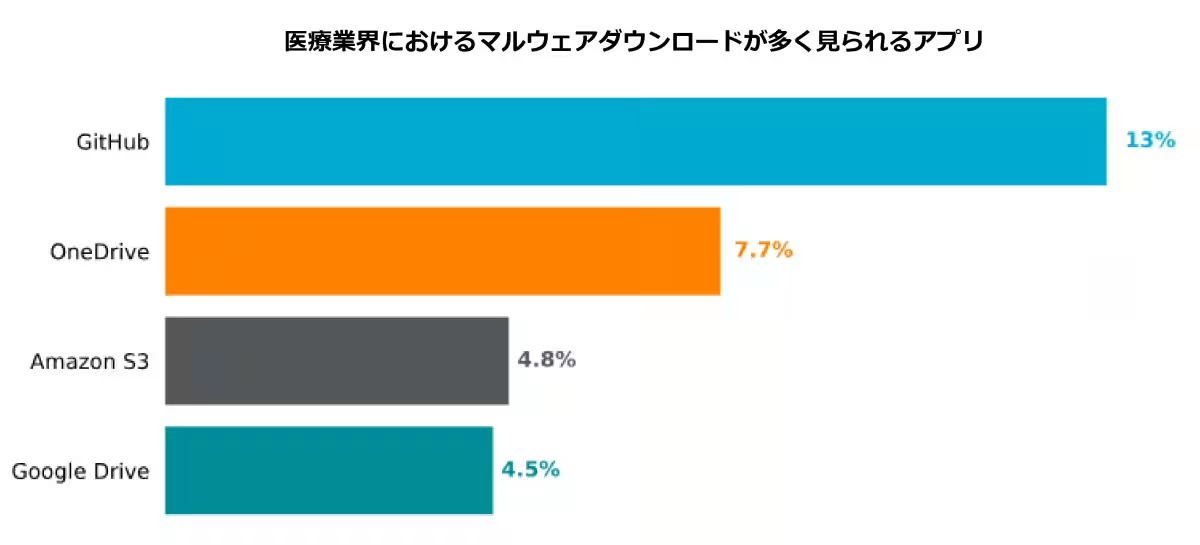

The report found that 81% of data policy violations in healthcare organizations over the past year involved regulated medical data. This category is protected under regional, national, or international regulations and typically includes highly sensitive medical and clinical information. Leaks of passwords, keys, source code, and intellectual property accounted for the remaining 19% of violations, with many incidents arising from employees uploading sensitive data to personal Microsoft OneDrive or Google Drive accounts without authorization.

Generative AI applications have spread rapidly through healthcare, and 88% of medical organizations now use them. That use has frequently coincided with data policy violations. A breakdown of these violations shows that 44% involved regulated data, 29% source code, 25% intellectual property, and 2% credentials such as passwords and keys. Applications that use user data to train AI models, and operational tools that incorporate generative AI features, pose additional data leak risks; they are in use at 96% and 98% of healthcare institutions, respectively.

The risk is compounded by the fact that more than two-thirds of generative AI users in the healthcare sector send sensitive data to personal generative AI accounts at work. This behavior reduces visibility for security teams and impairs their ability to detect and prevent data breaches, especially where sufficient data protection safeguards are absent.

Security Implications and Recommendations

Gianpietro Cutolo, a cloud threat researcher at Netskope Threat Labs, stresses the dual nature of generative AI applications. While they offer innovative solutions, they can also open new pathways for data leaks. Particularly in the fast-paced environment of healthcare, organizations must embrace the benefits of generative AI while ensuring that robust security measures and data protection frameworks are established to mitigate risks.

To address these challenges, the report suggests several strategic actions:

1. Provisioning Approved Generative AI Applications: Providing employees with organizationally approved generative AI tools helps to centralize and control their usage within a monitored environment, reducing reliance on personal accounts and discouraging the use of 'shadow AI' services.

2. Implementing Strict Data Loss Prevention (DLP) Policies: Enforcing DLP measures to monitor and control access to generative AI applications is crucial. Such policies define permissible data types and serve as an additional safety net should employees engage in high-risk behaviors. The report found that the share of healthcare organizations applying DLP strategies to generative AI has risen from 31% to 54% over the past year.

3. Real-Time User Coaching: Real-time alerts can help change employee behavior that puts data at risk. For instance, if a healthcare professional attempts to upload a file containing patient names to ChatGPT, a prompt can ask the user to confirm before proceeding. Studies indicate that 73% of employees across industries are likely to halt their actions when confronted with such warnings.
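To make the coaching idea in the recommendations above concrete, the following is a minimal Python sketch of a check that could run before an upload to a generative AI service. It is not how Netskope or any specific DLP product works. The destination list, the two regular-expression patterns, and the names `evaluate_upload` and `Decision` are all hypothetical and chosen for illustration only; real DLP engines rely on far richer detection than simple patterns.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical detection patterns (assumptions, not from the report).
PATTERNS = {
    "medical_record_number": re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical set of generative AI destinations that trigger coaching.
COACHED_DESTINATIONS = {"chatgpt.com", "gemini.google.com"}


@dataclass
class Decision:
    action: str          # "allow" or "coach"
    matches: List[str]   # names of the patterns that matched


def evaluate_upload(destination: str, text: str) -> Decision:
    """Return "coach" when sensitive-looking text is headed to a coached destination."""
    if destination not in COACHED_DESTINATIONS:
        # This sketch only coaches on the listed generative AI destinations.
        return Decision("allow", [])
    matches = sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))
    return Decision("coach" if matches else "allow", matches)


def coaching_message(decision: Decision, destination: str) -> str:
    """Build the confirmation prompt shown to the user for a "coach" decision."""
    found = ", ".join(decision.matches)
    return (
        f"This upload to {destination} appears to contain: {found}. "
        "Do you want to proceed?"
    )


if __name__ == "__main__":
    decision = evaluate_upload("chatgpt.com", "Patient MRN: 12345678 admitted today")
    if decision.action == "coach":
        print(coaching_message(decision, "chatgpt.com"))
```

The sketch asks for confirmation rather than blocking outright, which mirrors the report's example of prompting the user before an upload proceeds.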

As generative AI applications proliferate and cloud usage surges, protecting regulated medical data becomes imperative within the healthcare sector. Gianpietro Cutolo asserts the urgent need for organizations to enhance their DLP measures and restrict application usage. Although healthcare entities are navigating these adjustments, a sustained focus on secure, approved solutions remains essential to safeguarding sensitive data in this dynamically evolving landscape.

Netskope currently protects millions of users from cyber threats, including data leaks. The insights shared in the report are derived from anonymized usage data collected through the Netskope One platform, with contributions from consenting healthcare clients. For the complete report, please refer to Netskope Threat Labs.

About Netskope

Netskope is a cybersecurity and networking company that delivers optimized access and real-time, context-based security for users, devices, and data, wherever they are located. Trusted by many organizations, including more than 30 of the Fortune 100, Netskope's One platform, zero-trust engine, and NewEdge network give organizations visibility into cloud, AI, SaaS, web, and private application usage while reducing security risk and improving network performance.

