The Rise of Shadow AI: A Deep Dive into Corporate Use of Generative AI Tools

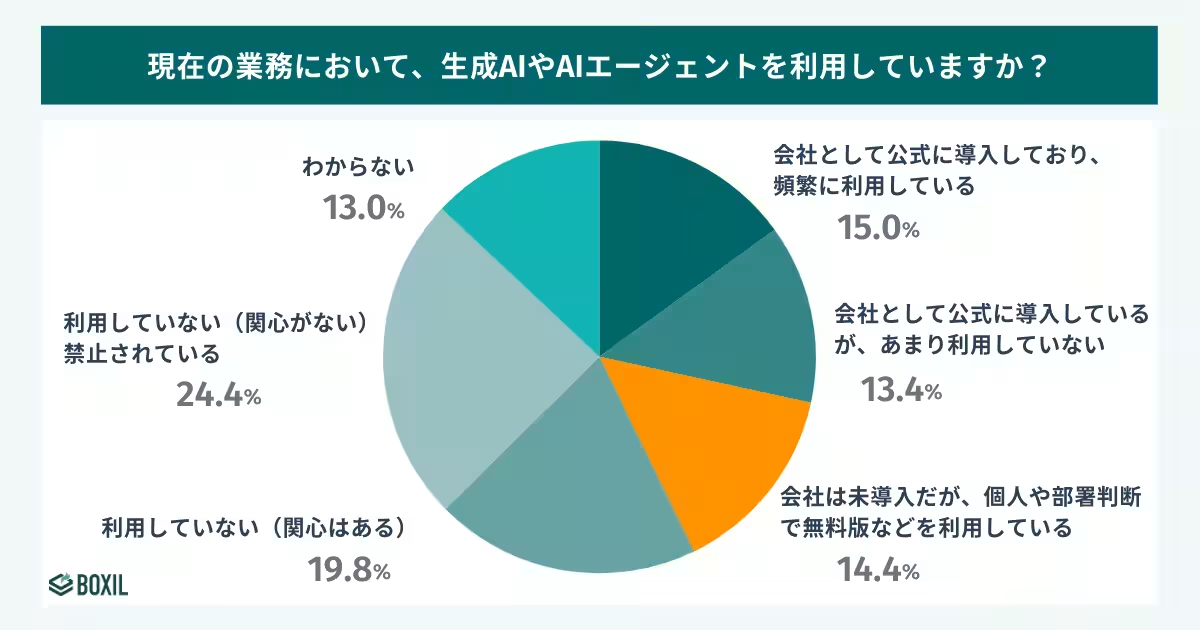

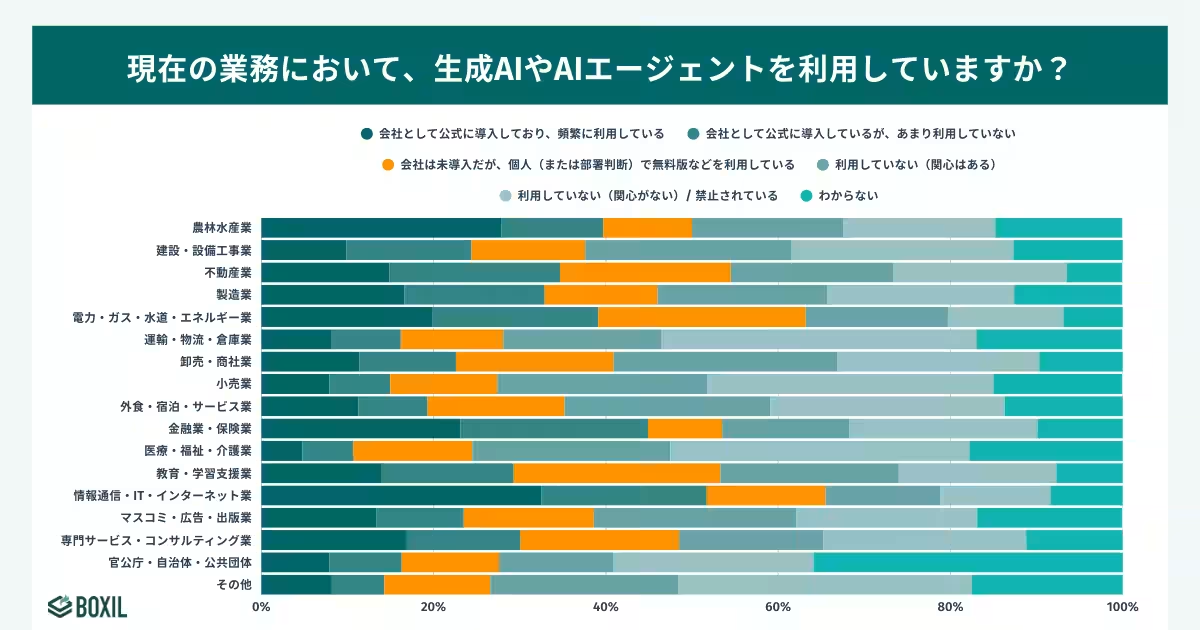

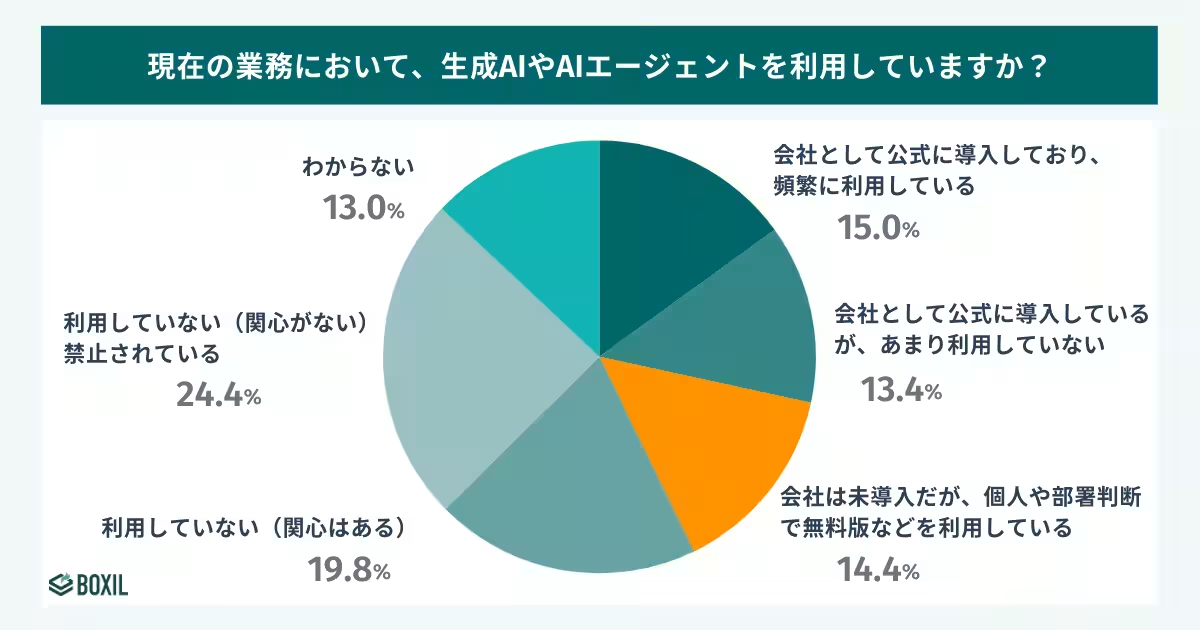

A survey conducted by Smartcamp Inc., operator of the SaaS comparison site BOXIL, found that 28.4% of respondents work at companies that have officially adopted generative AI tools such as ChatGPT and Gemini. The study also exposed a growing 'shadow AI' trend: 14.4% of respondents said they use freely available AI tools for work without their company's authorization. This trend highlights gaps in the corporate structures and policies that govern AI use.

The survey included responses from 1,365 employees across various companies in Japan. Among these respondents, 28.4% confirmed their companies have formally integrated generative AI tools, with 15.0% frequently using them and another 13.4% reporting limited usage. In stark contrast, a notable fraction of employees—14.4%—reported utilizing unofficial AI tools for work-related tasks, pointing to a significant gap in corporate governance and employee understanding of AI risks.

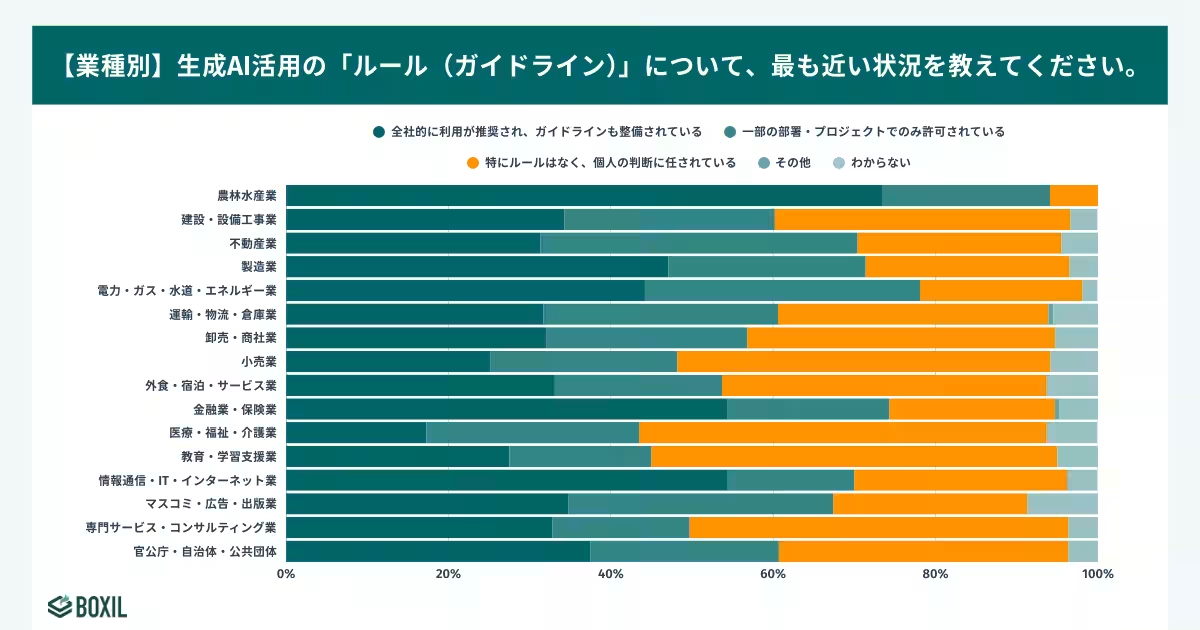

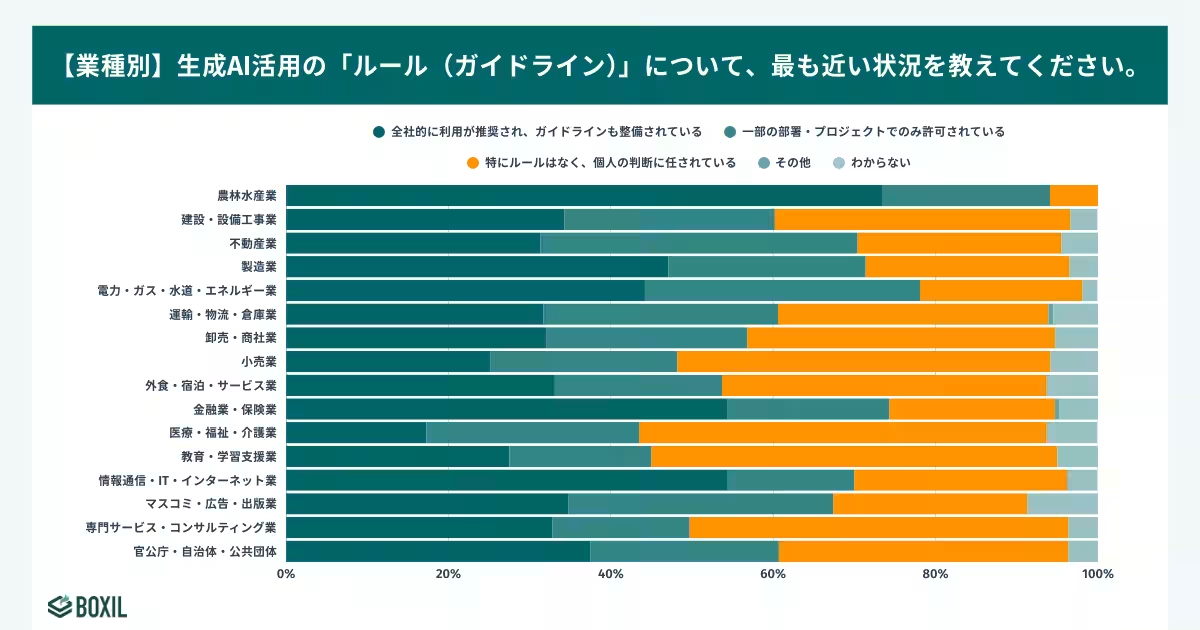

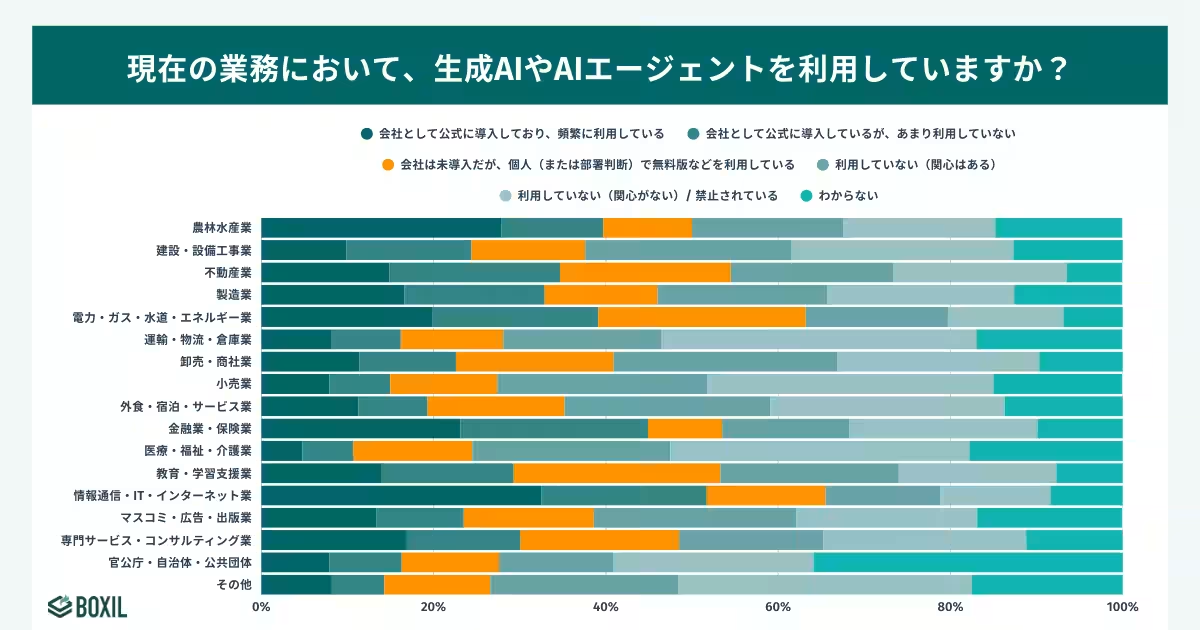

One of the most striking findings of the survey was that 31.9% of the respondents stated there were no specific guidelines in place regarding the use of generative AI in their workplaces. This lack of regulation leads to a reliance on individual judgment, which can jeopardize data security and governance. It is particularly concerning that in industries such as education, energy, and real estate, the rates of personal use outpace official adoption rates. For instance:

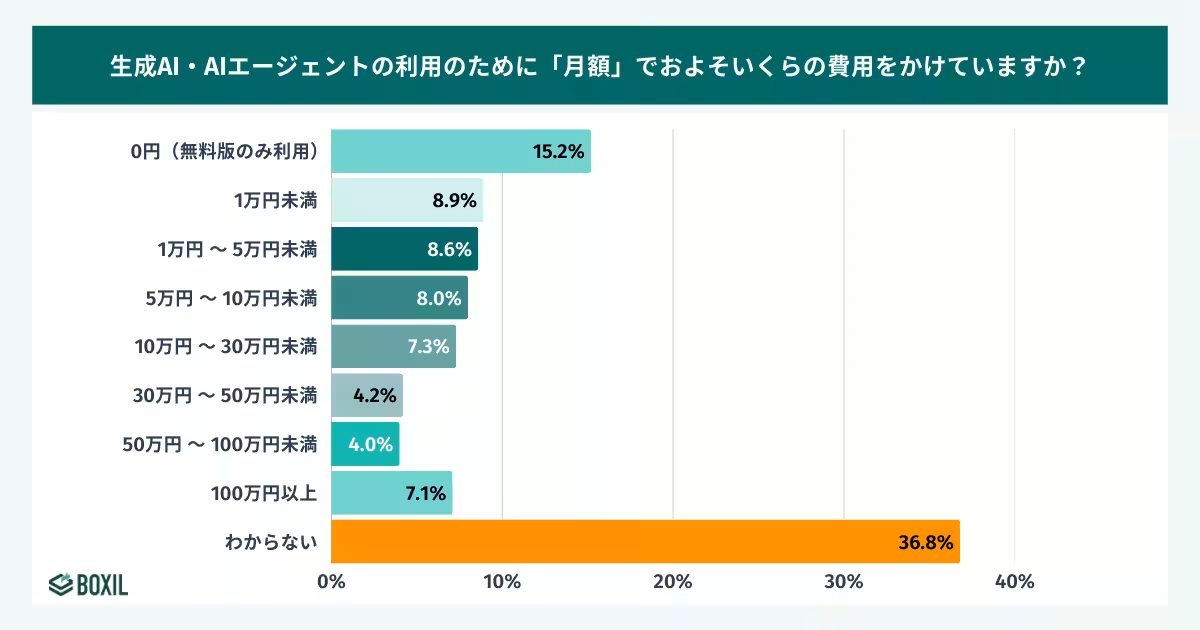

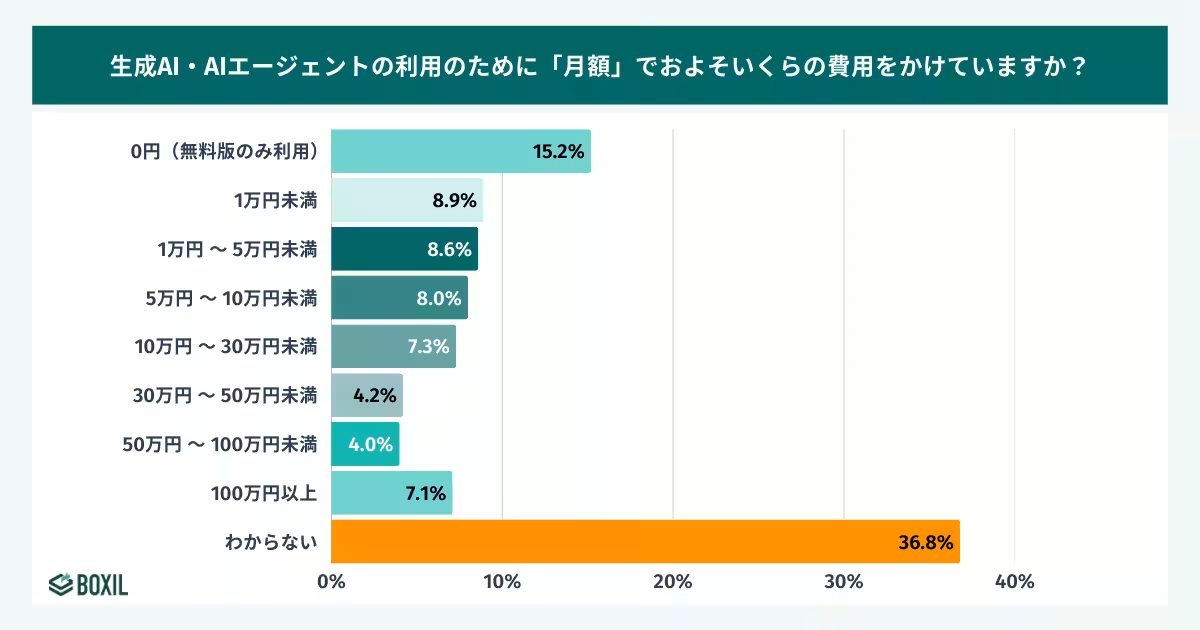

The survey also uncovered that nearly 40% of the participants were uncertain about the costs associated with AI tool usage. In terms of governance, the lack of financial transparency is as troubling as the absence of operational guidelines. With 36.8% of respondents admitting they did not know how much their organizations were spending on AI tools, it highlights a severe lack of oversight.

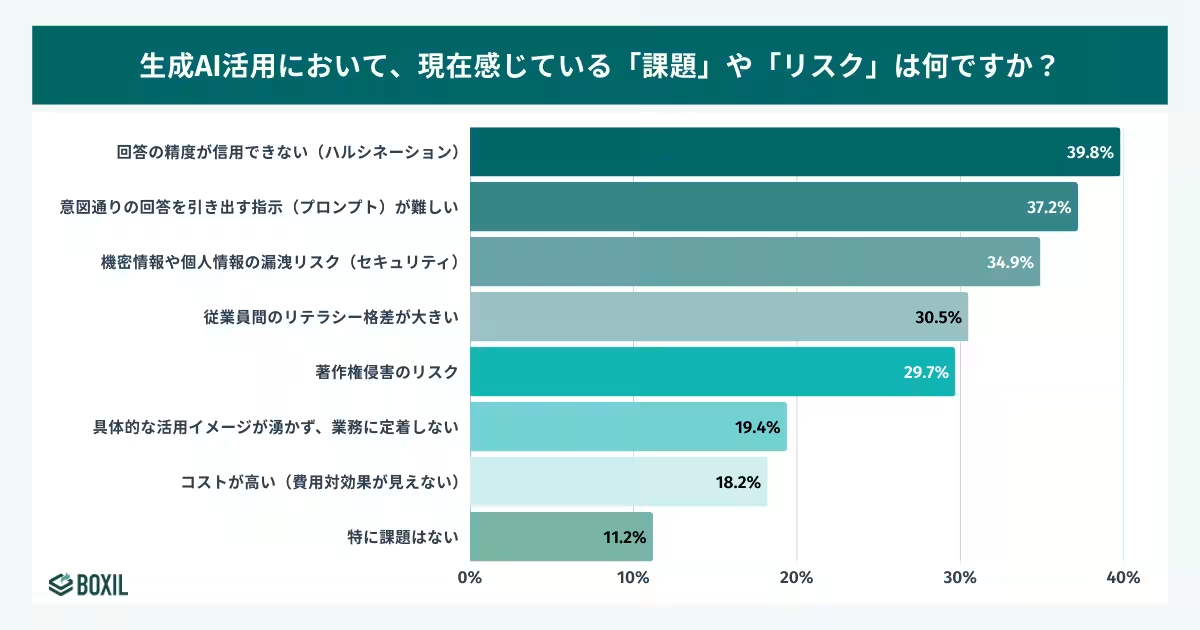

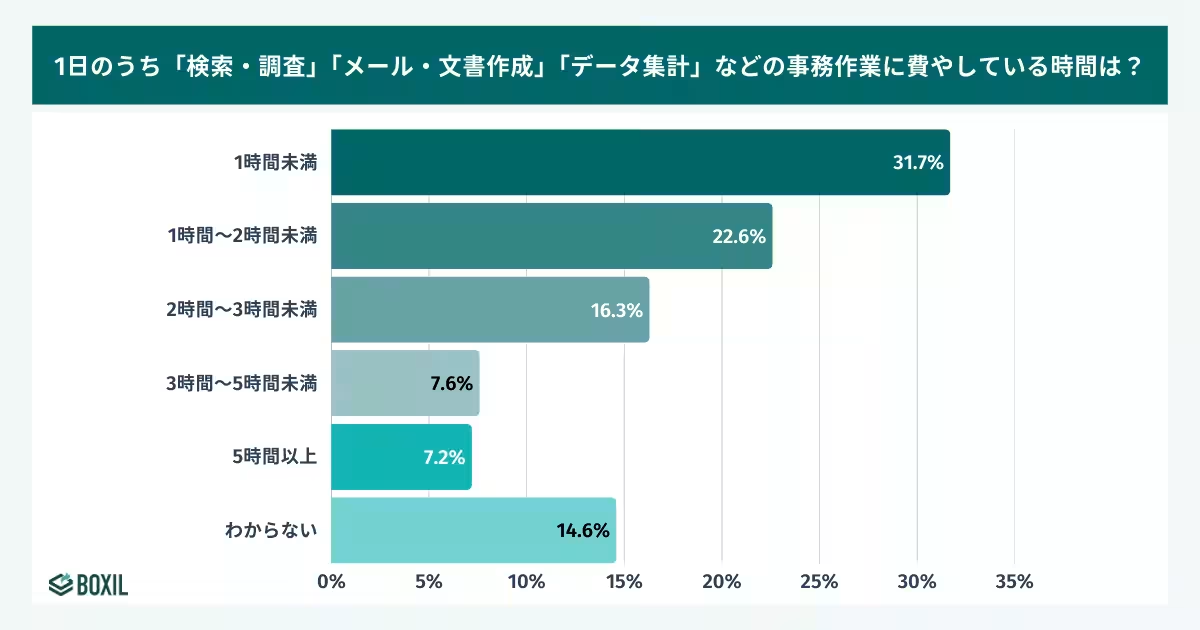

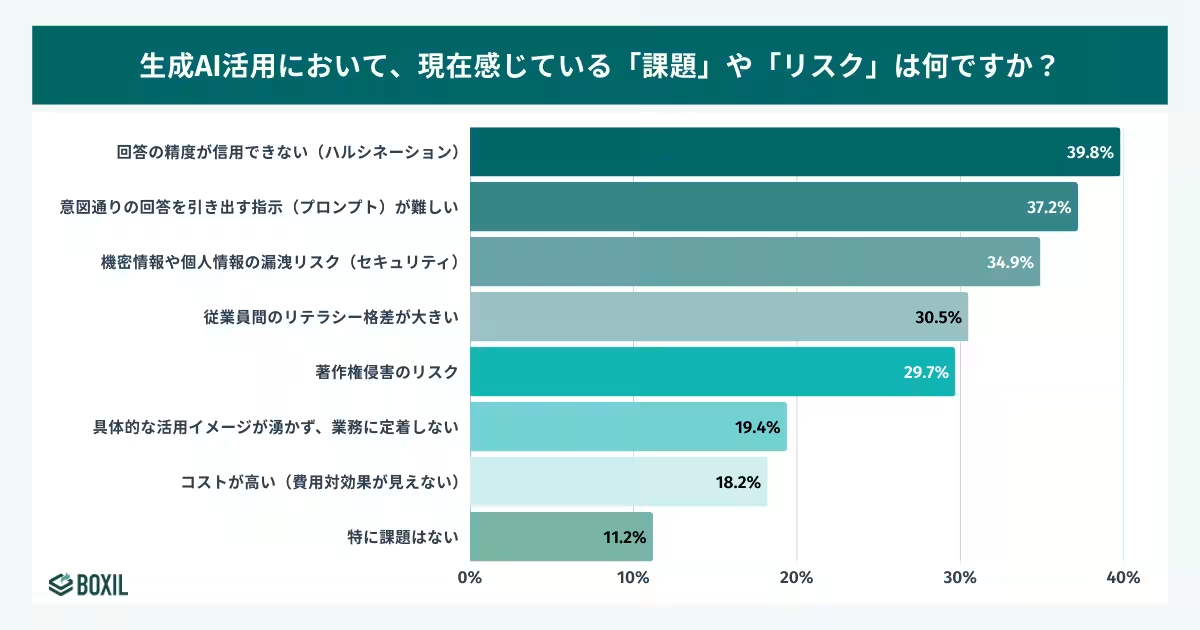

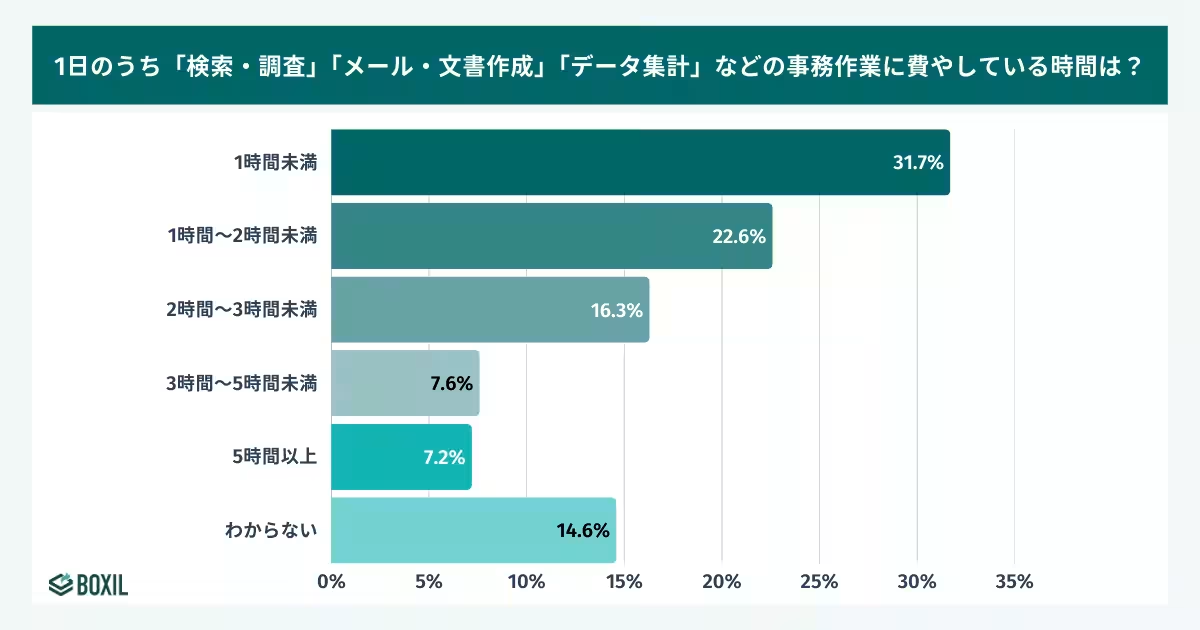

Respondents identified the risk of data breaches as a top concern, reflecting a growing awareness of the vulnerabilities associated with informal AI use. Despite realizing the potential dangers, employees continue to prioritize efficiency, often performing two or more hours of administrative tasks daily. This paradox illustrates a conflict where the immediate benefits of automation overshadow the long-term risks of security breaches.

The survey presents a compelling case for organizations to reassess their AI strategies and governance frameworks. Companies need to develop comprehensive guidelines that address not only the ethical use of AI but also ensure that employees are trained adequately to harness the power of generative AI responsibly. With the rapid rise of AI technologies, organizations must find a balance between innovation and risk management.

For further insights and detailed findings from the survey, please visit BOXIL's official page. It is crucial for organizations to engage in discussions about the implications of generative AI and to create an environment that encourages safe and innovative use of these transformative tools.

Current State of Generative AI Adoption

The survey collected responses from 1,365 employees at companies across Japan. Of these respondents, 28.4% said their companies have formally introduced generative AI tools: 15.0% reported frequent use and 13.4% reported limited use. Separately, 14.4% reported using unofficial AI tools for work-related tasks, which points to a significant gap in corporate governance and in employees' understanding of AI risks.
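Because the survey reports percentages of a known total, the shares can be turned into rough headcounts and checked against one another. The following is a minimal Python sketch of that arithmetic. Only the 1,365 total and the percentages come from the survey; the labels and the `approx_count` helper are hypothetical, and the counts are approximations that may be off by one because the published percentages are themselves rounded.

```python
# Minimal sketch: convert the survey's reported percentages into approximate
# respondent counts. The total and percentages are from the survey; the labels
# and helper name are hypothetical, for illustration only.

TOTAL_RESPONDENTS = 1365

# Shares of ALL respondents, in percent, as reported.
SHARES_PCT = {
    "official adoption, frequent use": 15.0,
    "official adoption, limited use": 13.4,
    "unauthorized (shadow AI) use": 14.4,
}


def approx_count(share_pct: float, total: int = TOTAL_RESPONDENTS) -> int:
    """Approximate headcount for a percentage share (source figures are rounded)."""
    return round(total * share_pct / 100)


# The two official-adoption sub-groups should add up to the 28.4% headline figure.
# Rounding to one decimal removes floating-point noise from the addition.
official_pct = round(
    SHARES_PCT["official adoption, frequent use"]
    + SHARES_PCT["official adoption, limited use"],
    1,
)

if __name__ == "__main__":
    for label, pct in SHARES_PCT.items():
        print(f"{label}: {pct}% = about {approx_count(pct)} respondents")
    print(f"official adoption total: {official_pct}% = about {approx_count(official_pct)} respondents")
```

The check confirms that the frequent-use and limited-use groups together make up the 28.4% who reported official adoption, roughly 388 of the 1,365 respondents, while the unauthorized-use group corresponds to roughly 197 people.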

The Shadow AI Phenomenon

One of the survey's most striking findings is that 31.9% of respondents said their workplaces have no specific guidelines on the use of generative AI. Without such rules, employees fall back on individual judgment, which can jeopardize data security and governance. It is particularly concerning that in industries such as education, energy, and real estate, personal use rates exceed official adoption rates. For example:

- Energy sector: 24.1% personal use vs. 19.9% official adoption.
- Education sector: 24.0% personal use vs. 14.0% official adoption.
- Real estate: 19.8% personal use vs. 14.9% official adoption.
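The sector figures can be compared in two ways: as a percentage-point gap and as a ratio of personal use to official adoption. The following is a minimal Python sketch of both calculations. The percentages are the survey's. The sketch assumes that the two figures in each pair share the same base of respondents in that sector, because the article does not state the denominators. The function names are hypothetical.

```python
# Minimal sketch: compare unauthorized personal use with official adoption for the
# sectors highlighted in the survey. Percentages are from the survey; the assumption
# that both figures in a pair share the same base, and the helper names, are
# illustrative only.

SECTOR_RATES = {
    # sector: (personal use %, official adoption %)
    "Energy": (24.1, 19.9),
    "Education": (24.0, 14.0),
    "Real estate": (19.8, 14.9),
}


def gap_points(personal: float, official: float) -> float:
    """Percentage-point lead of personal use over official adoption."""
    return round(personal - official, 1)


def use_ratio(personal: float, official: float) -> float:
    """How many times larger personal use is than official adoption."""
    return round(personal / official, 2)


def rank_by_gap(rates: dict[str, tuple[float, float]]) -> list[tuple[str, float, float]]:
    """Return (sector, gap, ratio) tuples, largest percentage-point gap first."""
    rows = [(name, gap_points(p, o), use_ratio(p, o)) for name, (p, o) in rates.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    for sector, gap, ratio in rank_by_gap(SECTOR_RATES):
        print(f"{sector}: +{gap} points, {ratio}x official adoption")
```

Ranked this way, education stands out. Personal use there (24.0%) exceeds official adoption (14.0%) by 10.0 points, about 1.7 times the official rate. The gaps in real estate (4.9 points) and energy (4.2 points) are smaller.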

Understanding Costs and Risks

The survey also found that more than a third of respondents (36.8%) did not know how much their organizations spend on AI tools. From a governance standpoint, this lack of financial transparency is as troubling as the absence of operational guidelines, and it points to a serious gap in oversight.

Respondents named the risk of data breaches as a top concern, which reflects growing awareness of the vulnerabilities that come with informal AI use. Despite recognizing these dangers, employees continue to prioritize efficiency, and many often spend two or more hours a day on administrative tasks, a workload that creates strong pressure to automate. The result is a paradox in which the immediate benefits of automation outweigh the longer-term risk of security breaches.

Moving Forward

The survey makes a compelling case for organizations to reassess their AI strategies and governance frameworks. Companies need comprehensive guidelines that not only address the ethical use of AI but also ensure that employees are adequately trained to use generative AI responsibly. As AI technologies spread rapidly, organizations must balance innovation with risk management.

For further insights and detailed findings from the survey, please visit BOXIL's official page. Organizations should discuss the implications of generative AI openly and create an environment that encourages safe and innovative use of these tools.


【About Using Articles】

You can freely use the title and article content by linking to the page where the article is posted.

※ Images cannot be used.

【About Links】

Links are free to use.