Ragate Launches New Service to Optimize AI Operational Costs Significantly

Ragate's New Service to Optimize Generative AI Costs

Introduction

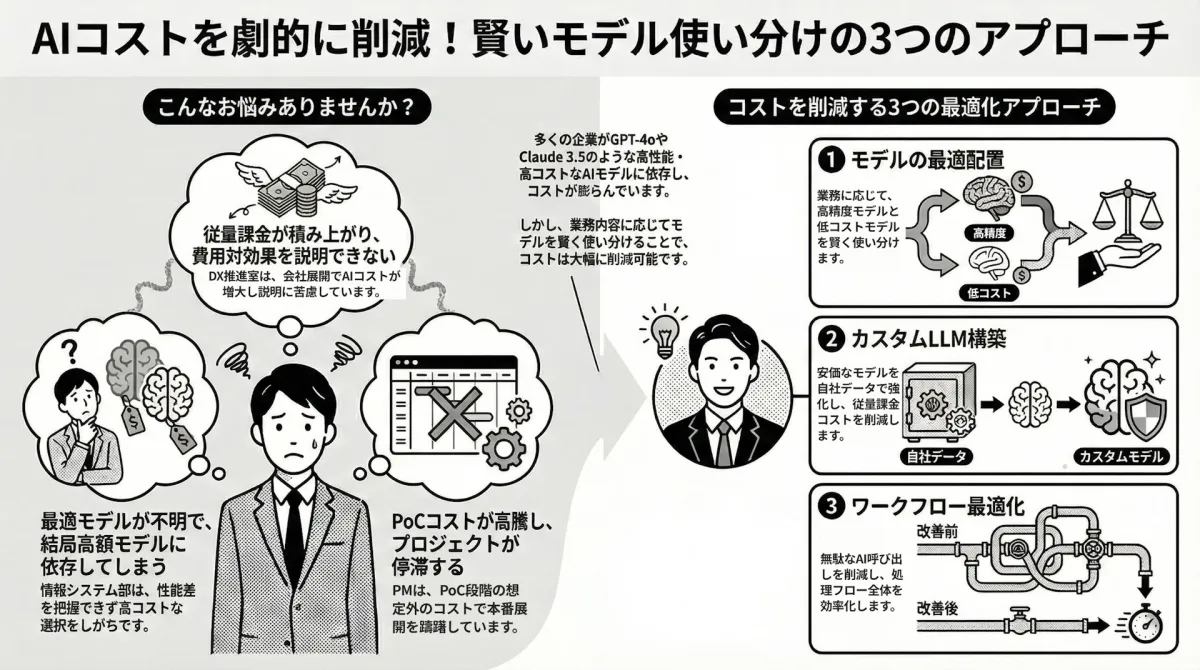

Ragate Inc. (Ragate) has announced the launch of a new service aimed at significantly reducing the operational costs of generative AI. The service, named "AI Model Smart Use Support," helps enterprises select the optimal AI model for each use case and optimize their AI workflows.

As businesses deploy generative AI across their organizations, many are facing rising operational costs. According to research, about 40% of companies are expected to have adopted generative AI by 2025, and more than one-third plan to increase their investment budgets. However, because models from multiple vendors, such as Claude, GPT, and Gemini, are billed on a pay-as-you-go basis, many DX (digital transformation) leaders struggle to justify cost-effectiveness, and IT departments often end up overly reliant on high-cost models.

To address these issues, Ragate aims to move away from the inefficient practice of applying high-performance, high-cost models uniformly to every task. The key insight is that the optimal model depends on each task's complexity and required accuracy; matching the model to the task lets companies maintain operational quality while substantially reducing costs.

Service Features

The newly launched service centers around three core approaches, ensuring a comprehensive strategy to enhance cost efficiency:

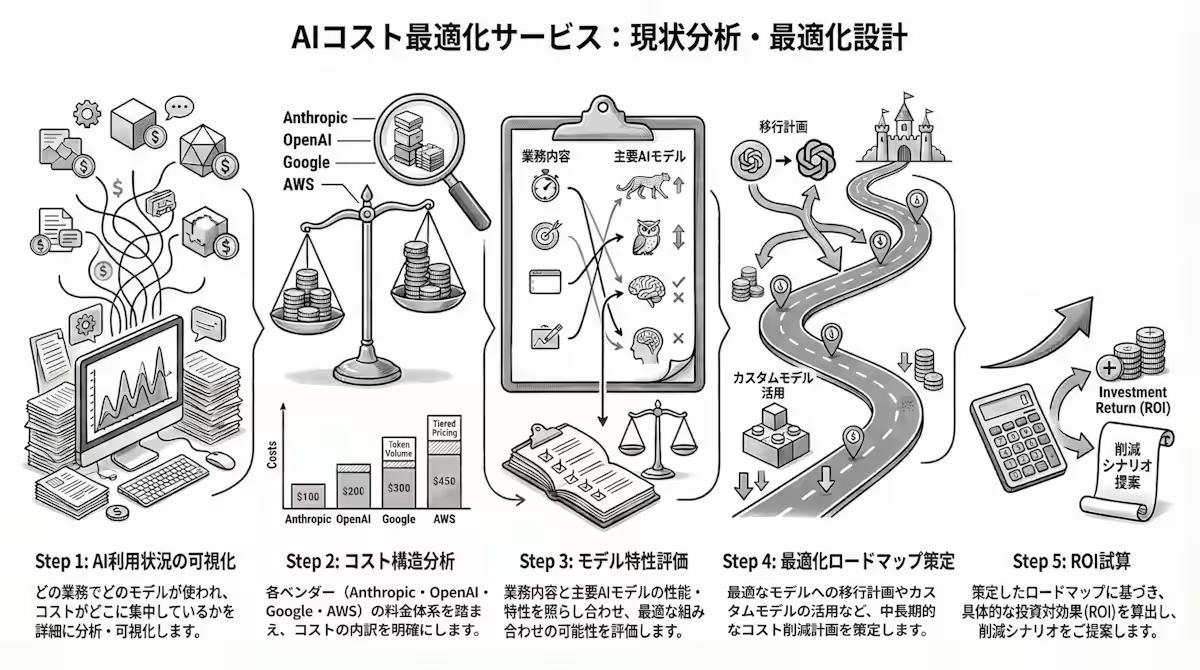

1. Multi-vendor Model Design

Ragate's expert team knows the characteristics of the models offered by the major vendors and selects the most suitable model for each task, reducing dependence on expensive models. Key initiatives include:

- Analyzing business characteristics to determine optimal model placement

- Providing cost simulations tailored to specific use cases

- Using high-precision models such as Claude or GPT for complex tasks and more economical models such as Amazon Nova or Titan for routine processing
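The model-placement and cost-simulation ideas above can be illustrated with a short Python sketch. It is a minimal illustration under stated assumptions, not Ragate's actual method: the 0-10 complexity score, the routing threshold of 7, the tier names, and every price are hypothetical placeholders that would be replaced with real task data and current vendor pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                 # placeholder label, not a real model ID
    usd_per_1k_input: float   # hypothetical price per 1,000 input tokens
    usd_per_1k_output: float  # hypothetical price per 1,000 output tokens


@dataclass(frozen=True)
class Task:
    name: str
    complexity: int        # assumed 0-10 score assigned during business analysis
    calls_per_month: int
    input_tokens: int      # average per call
    output_tokens: int     # average per call


# Hypothetical tiers and prices for illustration only, NOT real vendor pricing.
HIGH = ModelTier("high-precision-tier", 0.003, 0.015)
ECONOMY = ModelTier("economy-tier", 0.0002, 0.0008)


def pick_model(task: Task, threshold: int = 7) -> ModelTier:
    """Send complex tasks to the high-precision tier, everything else to the economy tier."""
    return HIGH if task.complexity >= threshold else ECONOMY


def call_cost(model: ModelTier, task: Task) -> float:
    """Cost of a single call at the task's average token counts."""
    return (task.input_tokens / 1000 * model.usd_per_1k_input
            + task.output_tokens / 1000 * model.usd_per_1k_output)


def simulate(tasks: list[Task]) -> tuple[float, float]:
    """Return (monthly cost with every task on HIGH, monthly cost with routing)."""
    baseline = sum(call_cost(HIGH, t) * t.calls_per_month for t in tasks)
    routed = sum(call_cost(pick_model(t), t) * t.calls_per_month for t in tasks)
    return baseline, routed


if __name__ == "__main__":
    tasks = [
        Task("contract review", complexity=9, calls_per_month=1_000,
             input_tokens=2_000, output_tokens=500),
        Task("FAQ answer", complexity=3, calls_per_month=20_000,
             input_tokens=500, output_tokens=200),
    ]
    baseline, routed = simulate(tasks)
    print(f"all high-precision: ${baseline:,.2f}/month, routed: ${routed:,.2f}/month")
```

The design choice here is deliberately simple: a single threshold on a precomputed complexity score. Real placement decisions would also weigh accuracy requirements measured on sample data, latency, and data-handling constraints.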

2. Custom LLM Development for Cost Reduction

The service also emphasizes custom LLM (large language model) development:

- Sourcing suitable open models from Hugging Face, and fine-tuning models based on Amazon Nova and Titan to improve AI accuracy

- Hosting models securely on Amazon SageMaker, shifting from pay-as-you-go pricing to a fixed-cost structure for significant long-term savings
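Whether a fixed-cost hosting structure actually saves money depends on usage volume. The Python sketch below shows the basic break-even arithmetic under stated assumptions: an always-on endpoint billed per instance-hour versus a pay-as-you-go API billed per token. The hourly rate, the blended per-token price, and the 730-hour month are hypothetical planning figures, and the comparison ignores fine-tuning, engineering, and operations costs as well as any quality difference between the models.

```python
HOURS_PER_MONTH = 730  # common approximation of the average number of hours in a month


def monthly_fixed_cost(usd_per_instance_hour: float, instances: int = 1) -> float:
    """Always-on endpoint billed per instance-hour (the rate is a placeholder)."""
    return usd_per_instance_hour * HOURS_PER_MONTH * instances


def monthly_pay_as_you_go(tokens_per_month: int, usd_per_1k_tokens: float) -> float:
    """Token-metered API cost at a blended (input + output) price per 1,000 tokens."""
    return tokens_per_month / 1000 * usd_per_1k_tokens


def break_even_tokens(fixed_usd: float, usd_per_1k_tokens: float) -> float:
    """Monthly token volume above which the fixed-cost endpoint becomes cheaper."""
    return fixed_usd / usd_per_1k_tokens * 1000


if __name__ == "__main__":
    fixed = monthly_fixed_cost(1.50)   # hypothetical $1.50/hour instance
    price = 0.01                       # hypothetical blended $ per 1k tokens
    print(f"fixed: ${fixed:,.0f}/month, "
          f"break-even: {break_even_tokens(fixed, price):,.0f} tokens/month")
```

Below the break-even volume, pay-as-you-go stays cheaper, which is why the cost simulation for each use case matters before committing to self-hosting.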

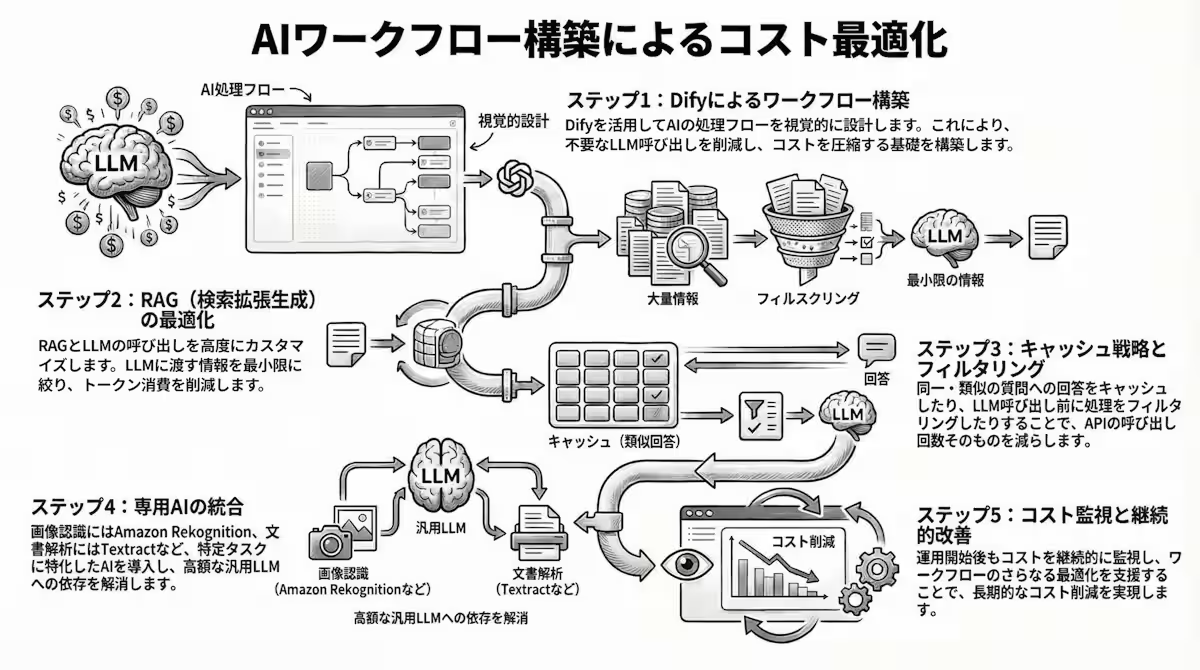

3. AI Workflow Optimization

Improving AI workflows with Dify is another critical aspect, aimed at minimizing unnecessary LLM calls to streamline operations:

- Implementing advanced workflows built on Dify

- Combining efficient information retrieval with caching strategies built on RAG (Retrieval-Augmented Generation)

- Integrating dedicated AI services for specific tasks, such as image recognition with Amazon Rekognition or document analysis with Amazon Textract
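One simple way to avoid unnecessary LLM calls is to cache answers to questions that are asked repeatedly. The Python sketch below is a generic exact-match cache, not Dify's built-in mechanism; `llm` is a hypothetical callable standing in for whatever model API the workflow uses. Production systems often go further with semantic (embedding-similarity) matching and cache expiry, which this sketch omits.

```python
import hashlib
from typing import Callable


class ResponseCache:
    """Exact-match cache: a question seen before (after normalization) skips the LLM call."""

    def __init__(self, llm: Callable[[str], str]):
        self._llm = llm                  # hypothetical stand-in for a real model call
        self._store: dict[str, str] = {}
        self.llm_calls = 0               # how many times the model was actually invoked

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different phrasings share a key.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def ask(self, prompt: str) -> str:
        key = self._key(prompt)
        if key not in self._store:
            self.llm_calls += 1
            self._store[key] = self._llm(prompt)
        return self._store[key]


if __name__ == "__main__":
    cache = ResponseCache(lambda p: f"(model answer to: {p})")
    cache.ask("What is our refund policy?")
    cache.ask("what is our  refund policy?")  # same normalized key, served from cache
    print(cache.llm_calls)  # 1
```

Exact matching is safe but catches only literal repeats; semantic matching catches paraphrases at the risk of returning an answer to a subtly different question, so the trade-off should be tuned per use case.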

Specific Support Structure

Ragate's service encompasses the entire process of generative AI cost reduction, from use case analysis to optimal model deployment. The support is divided into three phases:

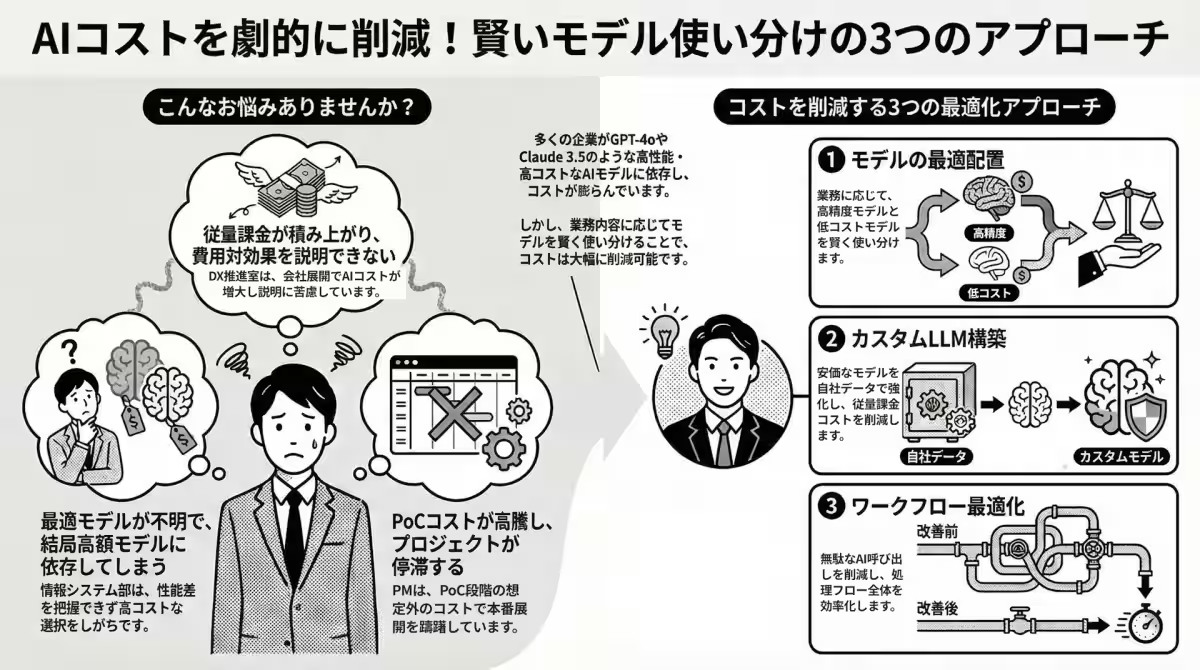

Phase 1: Current Analysis and Optimization Design

Analyzing current AI usage to formulate a cost optimization roadmap tailored to each business and use case, including:

- Cost structure analysis

- Evaluation of each vendor's model characteristics

- Development of an optimization roadmap

- ROI estimation
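ROI estimation at this stage usually reduces to simple arithmetic on monthly costs before and after optimization. The Python sketch below shows one common formulation (ROI over a planning horizon, plus payback period); the 12-month horizon and all input figures are hypothetical, and a real estimate would also account for ongoing hosting and maintenance costs.

```python
import math


def roi_estimate(cost_before: float, cost_after: float,
                 investment: float, months: int = 12) -> tuple[float, float]:
    """Return (ROI over the horizon, payback period in months).

    cost_before / cost_after: monthly AI operating costs before and after optimization.
    investment: one-time optimization cost; must be positive.
    """
    monthly_savings = cost_before - cost_after
    roi = (monthly_savings * months - investment) / investment
    # With no savings, the investment never pays back.
    payback = investment / monthly_savings if monthly_savings > 0 else math.inf
    return roi, payback


if __name__ == "__main__":
    # Hypothetical figures: $10,000/month -> $4,000/month after a $30,000 project.
    print(roi_estimate(10_000, 4_000, 30_000))  # (1.4, 5.0)
```

An ROI of 1.4 here means the 12-month savings exceed the investment by 140%, with the project paying for itself after five months.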

Phase 2: Model Optimization and Development

Selecting models to fit each business and building custom LLMs trained on proprietary data, including:

- Sourcing and evaluating Hugging Face models

- Executing fine-tuning

- Setting up hosting environments on Amazon SageMaker

- Introducing dedicated AI services such as Amazon Rekognition

Phase 3: Workflow Construction

Building AI workflows in Dify that optimize LLM calls and reduce costs, including:

- Designing optimized caching strategies with RAG

- Integrating dedicated AI solutions

- Implementing cost monitoring and continuous improvement systems

Conclusion and Future Outlook

As enterprises move deeper into generative AI, the focus is clearly shifting from merely adopting the technology to operating it efficiently. A multi-vendor strategy both optimizes costs and mitigates the risk of vendor lock-in. Ragate's "smart use" approach goes beyond simple cost reduction: it is a strategic initiative for sustaining generative AI use over the long term.

Workflow optimization with Dify not only reduces LLM calls but also makes AI usage visible and fosters a culture of continuous improvement within organizations. This lets businesses keep incorporating the latest optimization techniques as the generative AI landscape evolves.

Ragate will draw on the expertise of its AWS FTR-certified team to help Japanese enterprises make effective technical use of generative AI, solving problems together with clients and ensuring that knowledge stays within the organization after the project ends.

Call to Action for Companies Facing AI Cost Challenges

Are you struggling with high operational costs for generative AI? Whether you want to reduce dependence on expensive models, clarify the cost-effectiveness of your AI operations, or have seen costs balloon during a PoC and stall full deployment, please contact us for guidance.

For further details and inquiries about our service, please visit Ragate's Service Page.
